
Large data centers are packed with servers, storage, switches, and other hardware as operators try to keep up with the latest technology trends. Amid all the changes occurring in the data center, one technology remains the go-to for storage connectivity. Fibre Channel has been a data center mainstay for many years because of its reliability and security. However, it's not only the enterprise market's first-round pick: many hardware and software vendors focus their testing on Fibre Channel to ensure products are ready for prime time before they ship to customers.

Dell PowerStore with Fibre Channel

Storage and switch vendors continue to add new features and enhancements to Fibre Channel, and software developers test Fibre Channel functionality before releasing software updates to the masses. Fibre Channel is still the predominant SAN technology in large data centers. Most VMware customers use Fibre Channel to take advantage of the ongoing innovation and existing reliability, resiliency, security, and speed in place today.

VMware Is Committed To Fibre Channel, And Marvell Leads The Way

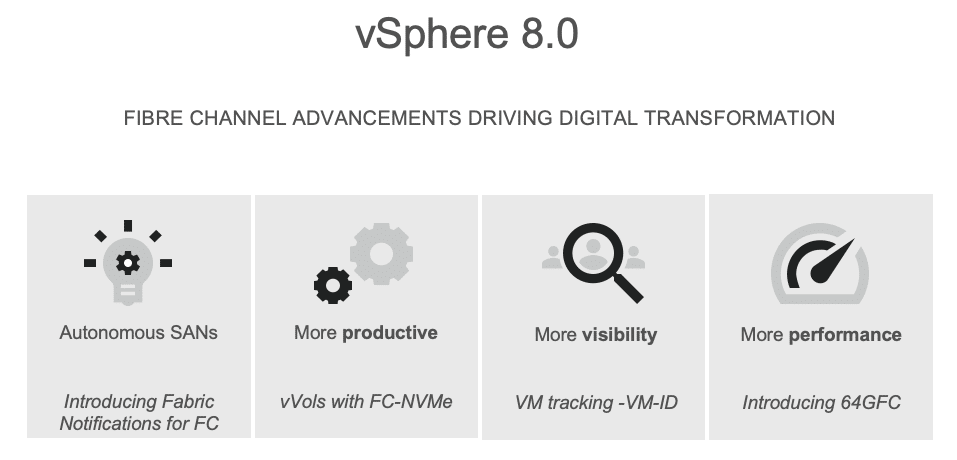

If there were any doubts about the importance of Fibre Channel technology, VMware put them to rest by prioritizing Fibre Channel in its new-release testing. Of all the announcements at VMware Explore, vSphere 8 was the most interesting: VMware continued its vVols storage engineering focus and added vVols support for NVMe-oF.

Marvell QLogic QLE2772 Dual-Port 32Gb FC Adapter

As stated on the VMware site, “Initially, we will support FC only, but will continue to validate and support other protocols supported with vSphere NVMe-oF.” The consensus at VMware is that customers might be shifting to NVMe-oF, iSCSI, and 100G Ethernet, but FC is preferred when it comes to high-performance and mission-critical applications. Therefore, Fibre Channel will continue to be at the forefront of technology advancements in vSphere. Although there is a shift to other technologies, VMware does not see a decrease in demand for Fibre Channel.

And it's not just VMware. Despite all the buzz around automation, machine learning, and DevOps, Fibre Channel is still top of mind for most IT professionals. Because of their inherent reliability, security, and low latency, SAN fabrics are critical to industries like healthcare, finance, manufacturing, insurance, and government. Of course, Fibre Channel technology isn't standing still: 64G fabrics are shipping today, and progressive switch and HBA vendors have a roadmap to 128G. Marvell QLogic has continued to demonstrate unparalleled leadership and innovation around Fibre Channel technology.

There is a reason Fibre Channel is the go-to technology for Storage Area Networks: it just works. There is a strong commitment from the vendor community to work together to advance the specifications to meet the changing demands of the industry. Testing by software vendors focuses on interoperability as well as security and reliability, and that is evident in the case of VMware vSphere 8. Vendors are committed to ensuring interoperability with Fibre Channel before releasing new updates or hardware into the data center.

FC Gets a Boost in vSphere 8, Bolsters FC-NVMe Implementation

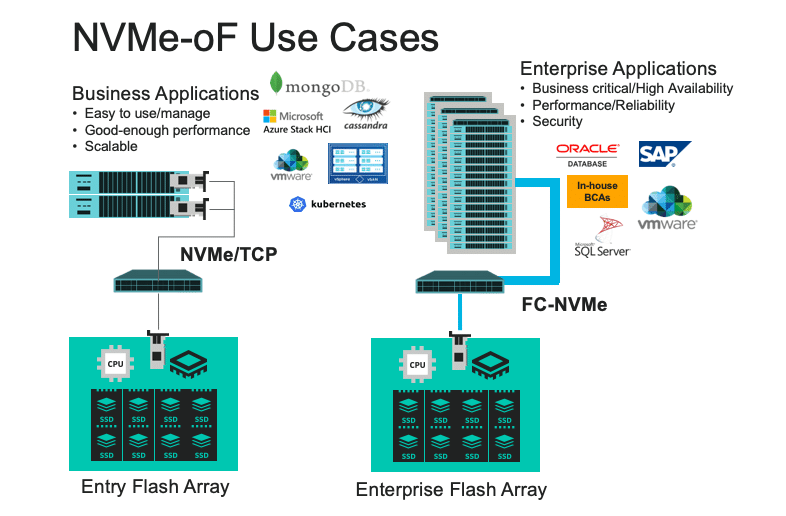

VMware introduced several core features in vSphere 8, including enhancements to NVMe over Fabrics (NVMe-oF). Of course, there are several reasons for the increased popularity of NVMe-oF. Primarily, customers enjoy the high performance and throughput associated with NVMe-oF over traditional SCSI and NFS. Storage vendors are moving to NVMe arrays and have added support for FC-NVMe across their product lines.

VMware took the cue and made significant enhancements to vSphere 8 specific to NVMe-oF, including:

- Increased number of supported namespaces and paths for both NVMe-TCP and FC-NVMe. vSphere 8 now supports 256 namespaces and 2K paths with NVMe-TCP and FC-NVMe.

- Extended reservation support for NVMe devices, for customers who rely on Microsoft Windows Server Failover Cluster (WSFC) for application-level disk locking. Initially available for FC only, this lets customers use the Clustered VMDK capability with Microsoft WSFC on NVMe-oF datastores.

- Added Advanced NVMe/TCP Discovery Services support in ESXi to help customers set up NVMe-oF in an Ethernet environment. Support for NVMe Advanced Discovery enables vSphere to query the network for supported NVMe array controllers, simplifying setup. This brings to NVMe/TCP the automatic discovery capabilities that Fibre Channel has supported since its inception.

The Four Pillars Driving Digital Transformation With Fibre Channel

Autonomous SANs

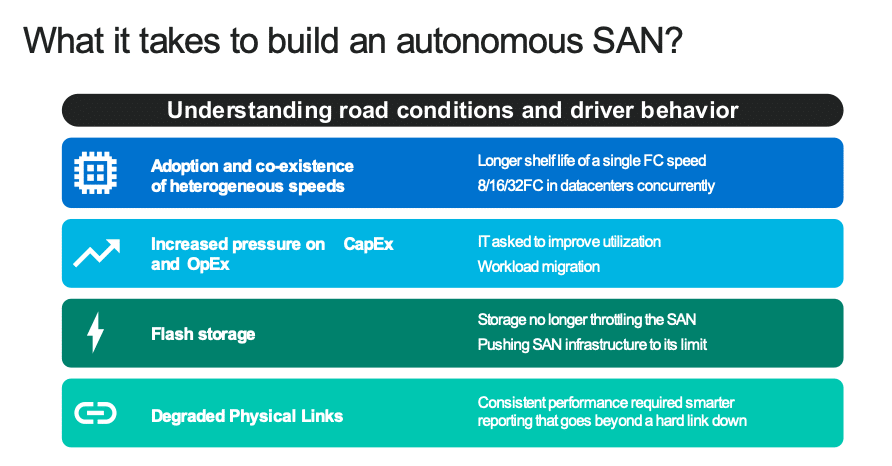

The buzz around autonomous everything is getting reasonably loud. Since we are talking SANs, specifically Fibre Channel SANs, this would be an excellent place to highlight how the Autonomous SAN works without getting caught in the weeds.

Autonomous SANs are essential because they support major industries like healthcare, finance, government, and insurance. Fibre Channel SANs are heavily deployed in these industries because of their inherent reliability and security.

An outage or downtime in these critical industries can be catastrophic. Depending on the duration, an outage could result in hundreds of thousands or millions of dollars in lost revenue. In healthcare, an outage might result in delaying a procedure or the inability to perform emergency services. With this in mind, the Fibre Channel industry standards body continues to pursue improvements in reliability and availability.

To meet the demands of the modern data center and advanced applications, industries are configuring devices to perform tasks not originally intended. It is not enough to have intelligent devices. Today, it is necessary to have an installed base that is aware of everything around it. This is referred to as “collaborative intelligence,” where devices are not only aware of activities taking place but also have the ability to take action when necessary.

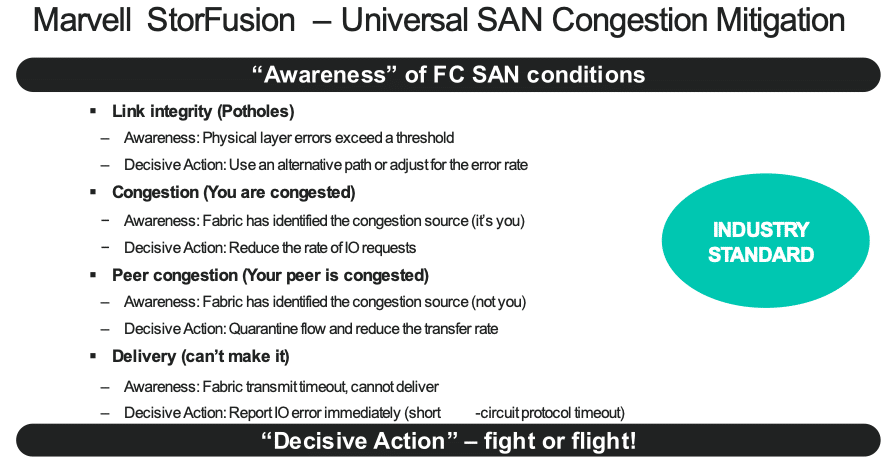

The first phase of the autonomous SAN effort is to develop an architectural element called Fabric Performance Impact Notifications (FPINs). Fabric Notifications create a mechanism to notify devices in the network of events occurring in the fabric, helping them make resiliency decisions. Two types of error conditions can occur. Logical errors can often be recovered through a retry or logical reset and are relatively non-disruptive to the system. Physical errors, on the other hand, often require some form of intervention to complete the repair. Intermittent physical errors are even more difficult to resolve and can be time-consuming to track down.

With Fabric Notifications, the fabric (or end device) detects the intermittent physical issue, monitors to see if the error is persistent, and if so, generates a message that is sent to the devices affected by the event. With this information, the multi-path solution knows the location and nature of the physical error and can “route around” it. The IT administrator does not need to get involved in identifying, isolating, or initiating recovery commands to clear the error.
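The detect, monitor, and notify sequence described above can be sketched as a sliding-window error counter. This is a minimal illustration, not the logic any switch or HBA actually runs: `PortErrorMonitor`, `notify_affected`, the window length, the threshold, and the message format are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class PortErrorMonitor:
    """Hypothetical monitor that flags a port once errors persist.

    window_s and threshold are illustrative values, not taken from any
    Fibre Channel standard.
    """
    port: str
    window_s: float = 60.0
    threshold: int = 5
    _events: deque = field(default_factory=deque)

    def record_error(self, timestamp: float) -> bool:
        """Record one error; return True if errors on this port are now persistent."""
        self._events.append(timestamp)
        # Forget errors that have fallen out of the sliding window.
        while self._events and timestamp - self._events[0] > self.window_s:
            self._events.popleft()
        return len(self._events) >= self.threshold


def notify_affected(port: str, affected_devices: list[str]) -> list[str]:
    """Build one hypothetical notification message per affected device."""
    return [f"to={dev} event=link-integrity port={port}" for dev in affected_devices]
```

Three errors within a minute cross a threshold of three, while the same three errors spread 100 seconds apart never do, so a one-off glitch does not trigger a notification.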

All of these mechanisms are controlled by the end devices through the exchange of capabilities and the registration of operations. Fibre Channel switches have visibility into other fabric components, which enables them to collect information on the storage network, attached devices, and the overall infrastructure. The switches exchange that information across the fabric, realizing the vision of an autonomous SAN that is self-learning, self-optimizing, and self-healing.

Ultimately, Fabric Notifications deliver intelligence to the devices in the fabric, eliminating wasted effort spent troubleshooting, analyzing, and resolving issues that the devices can now correct automatically, whether those issues degrade performance or cause failures.

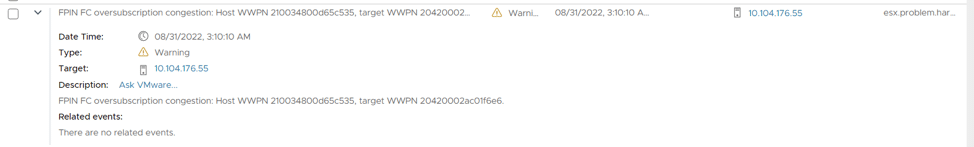

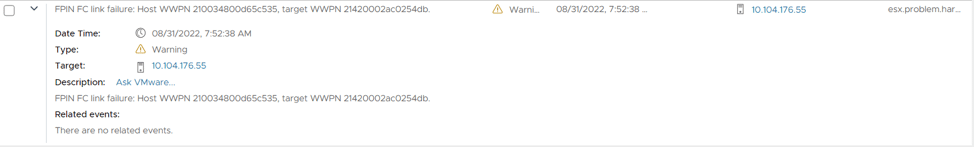

VMware has laid the foundation for an intelligent, self-driving SAN by adding the ability to receive, display, and enable alerts for FC fabric events using Fabric Performance Impact Notifications (FPINs), an industry-standard technology.

An FPIN is a notification frame transmitted by a fabric port to notify an end device of a condition affecting another port in its zone. The conditions include the following:

- Link integrity issues that are degrading performance

- Dropped frame notifications

- Congestion issues

With a proactive notification mechanism, port issues can be resolved quickly, and recovery actions can be set to mitigate downtime.
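To illustrate how a multipath layer might use these notifications to route around a problem, here is a toy round-robin path selector. `FpinCondition` and `MultipathSelector` are hypothetical names; the enum values simply mirror the three conditions listed above rather than the descriptor encodings defined in the Fibre Channel standards, and real multipath drivers apply their own policies.

```python
from enum import Enum


class FpinCondition(Enum):
    # Illustrative labels for the conditions listed above, not FC-LS encodings.
    LINK_INTEGRITY = "link integrity"
    DROPPED_FRAMES = "dropped frames"
    CONGESTION = "congestion"


class MultipathSelector:
    """Toy round-robin path selector that avoids paths named in notifications."""

    def __init__(self, paths: list[str]) -> None:
        self.paths = list(paths)
        self.degraded: dict[str, FpinCondition] = {}
        self._next = 0

    def on_fpin(self, path: str, condition: FpinCondition) -> None:
        # Mark the path so new I/O is routed around it.
        self.degraded[path] = condition

    def clear(self, path: str) -> None:
        # Called once the condition is resolved, e.g., after a repair.
        self.degraded.pop(path, None)

    def next_path(self) -> str:
        healthy = [p for p in self.paths if p not in self.degraded]
        # If every path is degraded, keep using all of them rather than failing I/O.
        candidates = healthy or self.paths
        path = candidates[self._next % len(candidates)]
        self._next += 1
        return path
```

Falling back to degraded paths when no healthy path remains is a design choice of this sketch: a slow path is usually better than no path for mission-critical I/O.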

Figure 1: vSphere 8.0 with Marvell QLogic FC registers for and receives fabric notifications indicating oversubscription in the SAN.

Figure 2: vSphere 8.0 with Marvell QLogic FC registers for and receives fabric notifications indicating deteriorating link integrity.

Marvell QLogic Enhanced 16GFC, Enhanced 32GFC, and 64GFC HBAs are fully integrated into vSphere 8.0 and support fabric notifications technology that serves as a building block for autonomous SANs.

Productivity With vVols

VMware has focused on vVols for the last few vSphere releases. With vSphere 8.0, core storage added vVols support for NVMe-oF, with only FC-NVMe supported in the initial release. However, VMware will continue to validate and support other protocols supported with vSphere NVMe-oF. The new vVols specification and VASA/VC framework are detailed in VMware's VASA 4.0/vVols 3.0 documentation.

With the industry and many array vendors adding NVMe-oF support for improved performance and lower latency, VMware wanted to ensure vVols remained current with recent storage technologies.

In addition to the improved performance, the NVMe-oF vVols setup is simplified. Once VASA is registered, the underlying setup is completed in the background, and the only thing left to create is the datastore. VASA handles all virtual Protocol Endpoint (vPE) connections. Customers can now manage NVMe-oF storage arrays in a vVols datastore via storage policy-based management in vCenter. vSphere 8 has also added support for additional namespaces and paths and improved vMotion performance.

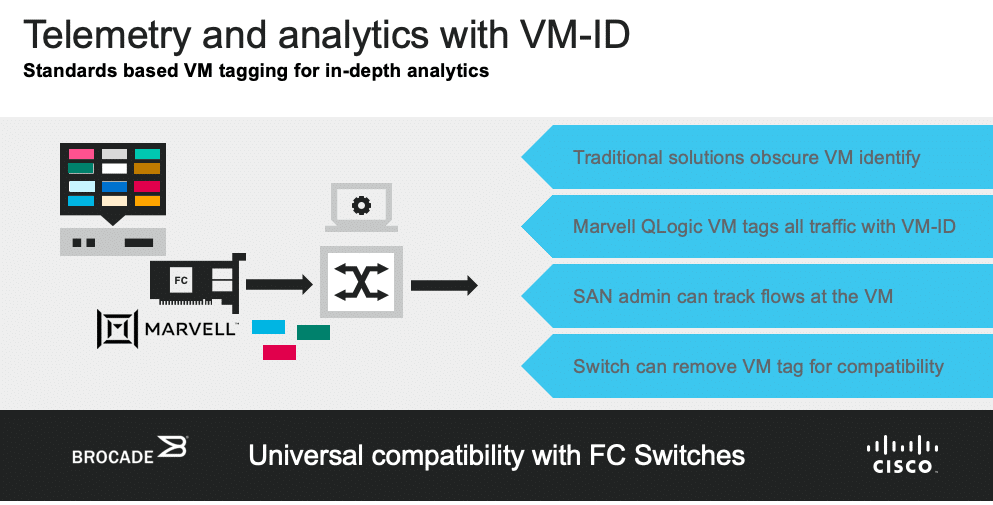

Tracking Virtual Machines With VM-ID Technology

Server virtualization has been a catalyst for increased link sharing, and Fibre Channel links are no exception. As the number of virtual machines (VMs) in the data center grows, shared links transport data associated with CPU cores, memory, and other system resources, using the maximum bandwidth available. Data sent from every VM, along with data from other physical systems, becomes intermixed. Because it all travels along the same Storage Area Network (SAN) path, the traffic looks the same and cannot be viewed as individual data streams.

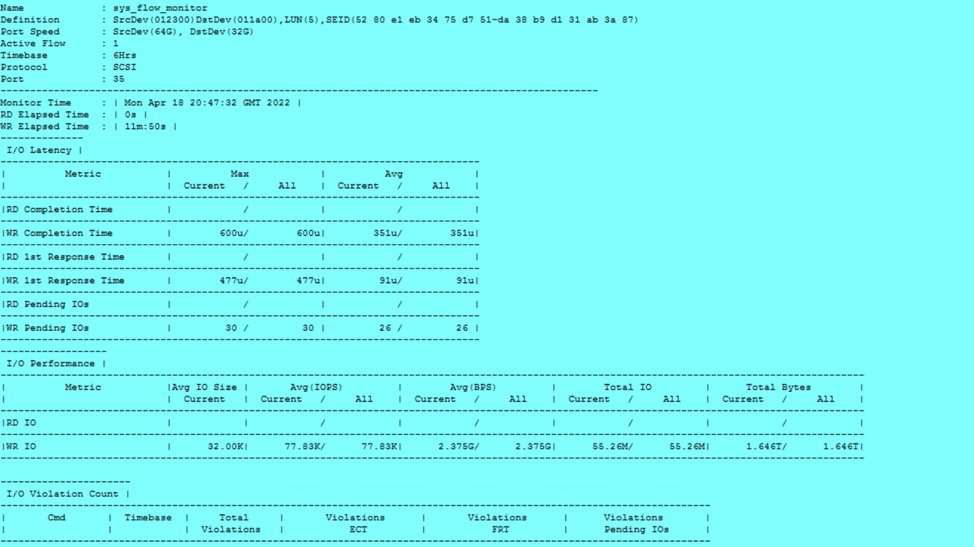

Marvell QLogic's VM-ID, an end-to-end solution that uses frame tagging to associate individual VMs with their I/O flows across the SAN, makes it possible to distinguish each VM on a shared link. QLogic has enabled this capability on its latest 64GFC, Enhanced 32GFC, and Enhanced 16GFC Host Bus Adapters (HBAs). The technology includes a built-in Application Services monitor that gathers each VM's globally unique ID from VMware ESX and then uses those IDs to perform intelligent monitoring.

VM-ID provides deep visibility into I/O from the originating VM through the fabric, giving SAN managers the ability to control and direct application-level services to each virtual workload within a QLogic Fibre Channel HBA.

Figure 3: Brocade switch analytics engine can now display per-VM statistics by counting the Fibre Channel frames tagged with individual VM-IDs by the Marvell Fibre Channel HBA.
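Conceptually, a per-VM view like the one in Figure 3 comes from grouping frames by their VM-ID tag. The sketch below assumes a simplified, hypothetical `Frame` record carrying a `vm_id` string; the real tag format and where it sits in the FC frame are defined by the Fibre Channel standards and are not modeled here. Every frame shares one source port, which is exactly why the tag is needed to tell the flows apart.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Frame:
    """Simplified FC frame holding only the fields this example needs."""
    src_port: str
    vm_id: Optional[str]   # hypothetical tag set by the HBA; None if untagged
    payload_bytes: int


def per_vm_stats(frames: Iterable[Frame]) -> dict[str, tuple[int, int]]:
    """Return {vm_id: (frame_count, byte_count)}; untagged frames go under 'untagged'."""
    counts: Counter = Counter()
    sizes: Counter = Counter()
    for f in frames:
        key = f.vm_id if f.vm_id is not None else "untagged"
        counts[key] += 1
        sizes[key] += f.payload_bytes
    return {k: (counts[k], sizes[k]) for k in counts}
```

Without the `vm_id` field, all four frames in a test like `[Frame("host1", "vm-a", 2048), Frame("host1", "vm-b", 1024), Frame("host1", "vm-a", 512), Frame("host1", None, 100)]` would collapse into a single `host1` bucket.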

Increasing Performance With 64GFC

Advancements in Fibre Channel have been ongoing since work on the protocol began in 1988. The first FC SAN products, running at 1Gb, began shipping in 1997, and the evolution continues today, with 128Gb products on the horizon.

Every three to four years, FC speeds double. Beyond the performance gains, new services such as Fabric Services, StorFusion™ with Universal SAN Congestion Mitigation, NPIV (virtualization), and cloud services have been added along the way. Networking companies and OEMs participate in developing these standards and continue to work together to deliver reliable and scalable storage network products.
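As a quick sanity check on that cadence, the helper below counts the doublings from 1GFC (first shipped in 1997, per the article) to a target speed and turns the three-to-four-year rule of thumb into a window of years. `doubling_window` is a hypothetical helper, and its output is only as good as the rule of thumb it encodes.

```python
def doubling_window(base_gbps: int, target_gbps: int, start_year: int,
                    min_years: int = 3, max_years: int = 4) -> tuple[int, int, int]:
    """Return (doublings, earliest_year, latest_year) to reach target_gbps,
    assuming speed doubles every min_years to max_years years."""
    if base_gbps <= 0 or target_gbps < base_gbps or target_gbps % base_gbps:
        raise ValueError("target must be a positive multiple of base")
    ratio = target_gbps // base_gbps
    if ratio & (ratio - 1):
        raise ValueError("target must be base times a power of two")
    doublings = ratio.bit_length() - 1   # log2 of a power of two
    return (doublings,
            start_year + min_years * doublings,
            start_year + max_years * doublings)
```

For 64GFC this gives six doublings and a 2015 to 2021 window; for 128GFC, seven doublings and a 2018 to 2025 window.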

Fibre Channel is considered the most reliable storage connectivity solution on the market, with a heritage of delivering progressive enhancements. Server and storage technologies are pushing the demand for greater SAN bandwidth. Growing application and storage capacity, 32Gb and 64Gb storage arrays supporting SSDs and NVMe, server virtualization, and multi-cloud deployments are proving Fibre Channel's worth as it delivers higher throughput, lower latency, and greater link speeds, all with predictable performance.

Marvell recently announced the introduction of its all-new 64GFC HBAs. These include the QLE2870 Series of single-, dual-, and quad-port FC HBAs that double the available bandwidth, work on a faster PCIe 4.0 bus, and concurrently support FC and FC-NVMe, ideal for future-proofing mission-critical enterprise applications.

NVMe Delivers!

There is no disputing that NVMe devices deliver extremely fast read and write access. The discussion therefore turns to connecting these NVMe devices to high-speed networks, often without considering that they are still storage devices that require guaranteed delivery. Fibre Channel is the technology that was developed as a lossless delivery method. In numerous tests by industry leaders, NVMe-oF performed better when the underlying transport was Fibre Channel (NVMe/FC).

Flash arrays allow for faster block storage performance in high-density virtualized workloads and reduce data-intensive application response time. This all looks pretty good unless the network infrastructure cannot perform at the same level as the flash storage arrays.

Flash-based storage demands a deterministic, low-latency infrastructure. Other storage networking architectures often increase latency, creating bottlenecks and network congestion. When that congestion causes packets to be dropped, they must be resent, creating even more congestion. With Fibre Channel's credit-based flow control, data is delivered as fast as the destination buffer can receive it, without dropping packets or forcing retransmissions.
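The difference between credit-based and drop-based behavior can be shown with a toy, tick-based simulation. With credits on, the sender starts with a buffer-to-buffer credit (BB_Credit) equal to the receiver's free buffers, spends one credit per frame, and regains one for each R_RDY the receiver returns as it frees a buffer. The model ignores link distance and frames in flight, and `simulate_link` and its parameters are hypothetical; `use_credits=False` models a sender that transmits regardless of buffer space.

```python
def simulate_link(frames_to_send: int, buffers: int, send_per_tick: int,
                  drain_per_tick: int, use_credits: bool = True,
                  max_ticks: int = 10_000) -> dict[str, int]:
    """Toy model of a link with or without buffer-to-buffer credits."""
    credit = buffers   # credits granted by the receiver up front
    rx = 0             # occupied receive buffers
    sent = delivered = dropped = ticks = 0
    while (sent < frames_to_send or rx > 0) and ticks < max_ticks:
        ticks += 1
        # Sender side.
        for _ in range(send_per_tick):
            if sent >= frames_to_send:
                break
            if use_credits and credit == 0:
                break              # wait for an R_RDY instead of transmitting
            sent += 1
            if use_credits:
                credit -= 1
            if rx < buffers:
                rx += 1            # frame lands in a free receive buffer
            else:
                dropped += 1       # no free buffer: the frame is lost
        # Receiver side: process frames, freeing buffers.
        drained = min(drain_per_tick, rx)
        rx -= drained
        delivered += drained
        if use_credits:
            credit += drained      # one R_RDY per freed buffer
    return {"sent": sent, "delivered": delivered, "dropped": dropped, "ticks": ticks}
```

With credits, `credit + rx` always equals `buffers`, so a frame is only sent when a buffer is free. With 100 frames, 4 buffers, a sender that can push 4 frames per tick, and a receiver that drains 2 per tick, the credited run delivers all 100 frames with no drops, while the uncredited run drops 48 that would need retransmission.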

We posted an in-depth review earlier this year on Marvell’s FC-NVMe approach. To learn more, check out Marvell Doubles Down on FC-NVMe.

Core NVMe Storage Features First Introduced in vSphere 7.0

A VMware blog described NVMe over Fabrics (NVMe-oF) as a protocol specification that connects hosts to high-speed flash storage via network fabrics using NVMe protocol. VMware introduced NVMe-oF in vSphere 7 U1. The VMware blog indicated that benchmark results showed that Fibre Channel (FC-NVMe) consistently outperformed SCSI FCP in vSphere virtualized environments, providing higher throughput and lower latency. Support for NVMe over TCP/IP was added to vSphere 7.0 U3.

Based on growing NVMe adoption, VMware added support for shared NVMe storage using NVMe-oF. Given NVMe's inherent low latency and high throughput, industries are taking advantage of it for AI, ML, and IT workloads. Traditionally, NVMe devices used a local PCIe bus, making it difficult to connect them to an external array. At the time, the industry had been advancing external connectivity options for NVMe-oF based on IP and FC.

In vSphere 7, that shared NVMe storage support covered NVMe over FC and NVMe over RDMA.

Fabrics continue to offer greater speeds while maintaining the lossless, guaranteed delivery required by storage area networks. vSphere 8.0 supports 64GFC, the fastest FC speed to date.

vSphere 8.0 Advances Its NVMe Focus, Prioritizing Fibre Channel

vVols has been the primary focus of VMware storage engineering for the last few releases, and with vSphere 8.0, core storage has added vVols support in NVMe-oF. Initially, VMware will support only FC but will continue to validate and support other NVMe-oF protocols.

This is a new vVols specification and VASA/VC framework. For details of the new specification, see VASA 4.0/vVols 3.0.

vSphere 8 continues to add features and enhancements, and it has increased the supported namespaces to 256 and paths to 2K for FC-NVMe and NVMe/TCP. vSphere also adds reservation command support for NVMe devices, which lets customers use the Clustered VMDK capability with Microsoft WSFC on NVMe-oF datastores.

Simple To Set Up And Simple To Manage!

Fibre Channel has another built-in efficiency: auto-discovery. When an FC device is connected to the network, it is automatically discovered and added to the fabric if it has the necessary credentials. The node map is updated, and traffic can pass across the fabric. It is a simple process that requires no administrator intervention.
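A deliberately simplified model of that flow follows. `ToyFabric` is hypothetical: the `allowed_wwpns` set stands in for whatever admission policy supplies "the necessary credentials," and the callback list stands in for the notifications a fabric sends when its membership changes. Real fabrics implement this with fabric login, the fabric name server, and Registered State Change Notifications (RSCNs), none of which are modeled in detail here.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyFabric:
    """Hypothetical, highly simplified model of fabric admission and discovery."""
    allowed_wwpns: set[str]
    node_map: dict[str, str] = field(default_factory=dict)   # WWPN -> role
    _listeners: list[Callable[[str, str], None]] = field(default_factory=list)

    def subscribe(self, callback: Callable[[str, str], None]) -> None:
        """Register a device that wants to hear about fabric changes."""
        self._listeners.append(callback)

    def connect(self, wwpn: str, role: str) -> bool:
        """Admit a device if it is allowed; update the node map and notify others."""
        if wwpn not in self.allowed_wwpns:
            return False                 # rejected: lacks the necessary credentials
        self.node_map[wwpn] = role       # node map updated without admin action
        for notify in self._listeners:
            notify(wwpn, role)
        return True

    def query(self, role: str) -> list[str]:
        """Return the WWPNs currently registered with the given role."""
        return sorted(w for w, r in self.node_map.items() if r == role)
```

An initiator that subscribes and then calls `query("target")` finds every admitted target without any manual configuration, which is the behavior the paragraph above describes.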

Implementing NVMe/TCP involves more overhead. Because NVMe/TCP has no built-in auto-discovery mechanism, ESXi has added NVMe Discovery Service support. Advanced NVMe-oF Discovery Service support in ESXi enables dynamic discovery of standards-compliant NVMe Discovery Services. ESXi uses mDNS/DNS-SD to obtain information such as the IP address and port number of active NVMe-oF discovery services on the network: it sends a multicast DNS (mDNS) query requesting information from entities that provide the NVMe discovery service via DNS-based Service Discovery (DNS-SD). If such an entity is active on the network on which the query was sent, it sends a unicast response to the host with the requested information, that is, the IP address and port number where the service is running.
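The query step can be sketched with the Python standard library. This is not ESXi's implementation: it builds a standard DNS-SD PTR question and multicasts it to the mDNS group 224.0.0.251, port 5353 (RFC 6762). The service type `_nvme-disc._tcp.local` is our assumption, so check it against the NVMe-oF discovery specification before relying on it. Because the query is sent from an ephemeral port rather than 5353, responders treat it as a one-shot query and reply by unicast, matching the behavior described above. Parsing the replies' PTR, SRV, and address records to extract the IP address and port is left out.

```python
import socket
import struct

MDNS_ADDR = ("224.0.0.251", 5353)     # mDNS IPv4 group and port (RFC 6762)
SERVICE = "_nvme-disc._tcp.local"     # assumed DNS-SD service type
QTYPE_PTR = 12
QCLASS_IN = 1


def encode_name(name: str) -> bytes:
    """Encode a DNS name as length-prefixed labels ending in a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) < 64:
            raise ValueError(f"bad label: {label!r}")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_ptr_query(service: str = SERVICE) -> bytes:
    """Build a one-question mDNS query asking which hosts provide `service`."""
    # Header: ID=0, flags=0 (standard query), 1 question, no other records.
    header = struct.pack("!6H", 0, 0, 1, 0, 0, 0)
    question = encode_name(service) + struct.pack("!2H", QTYPE_PTR, QCLASS_IN)
    return header + question


def send_query(timeout: float = 2.0) -> list[tuple[bytes, tuple]]:
    """Multicast the query once and collect raw unicast replies (unparsed)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.settimeout(timeout)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, 255)
    replies = []
    try:
        sock.sendto(build_ptr_query(), MDNS_ADDR)
        while True:
            replies.append(sock.recvfrom(9000))
    except socket.timeout:
        pass   # no more replies within the timeout
    finally:
        sock.close()
    return replies
```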

Conclusion

Fibre Channel was purpose-built to carry block storage, and as outlined in this article, it is a low-latency, lossless, high-performance, reliable fabric. To be clear, strides are being made to improve the use of TCP for storage traffic across some significant high-speed networks. But the fact remains that the networks TCP runs over are not lossless, so data retransmission is still a concern.

Marvell FC Product Family

This report is sponsored by Marvell. All views and opinions expressed in this report are based on our unbiased view of the product(s) under consideration.

Engage with StorageReview
